AI Wellbeing Dashboard: Optimizing AI for long-term wellbeing

TL;DR

AI should support our long-term wellbeing: Wellbeing is fundamentally what we care about. AI pointed towards wellbeing could be transformative. The path we’re on if we don’t orient towards wellbeing is dire: addictive AI companions and loss of agency are just two of the costs we could face.

To do this, we need to measure & incentivize wellbeing: We get what we measure. To orient towards wellbeing, we need to measure the effect of LLMs on wellbeing, and then incentivize AI companies to optimize for this.

The AI Wellbeing Dashboard is one solution: The AI Wellbeing Dashboard lets people share the effect of LLMs on their wellbeing, and then publicly ranks how AI applications affect people’s wellbeing. Help us incentivize AI labs to orient towards wellbeing by sharing your feedback here.

AI should support our long-term wellbeing.

The question of how to live a good life has captured our attention for thousands of years. In a lot of ways, it’s the question: Why do we do anything, if not in pursuit of a good life for us and others? Underneath all of our global goals (reducing poverty, improving access to opportunity, environmental sustainability) is the unspoken foundation: We want people to flourish. To live a good life, fulfill their potential, be happy and satisfied, achieve their goals, and all the other various ways we define what is intrinsically good.

If we want to create a world where everyone is flourishing, then our institutions need to be oriented towards it. We’re increasingly seeing this as governments wake up to the fact that optimizing for GDP leads to huge human & planetary wellbeing costs (climate change and the mental health crisis scream out as examples). The wellbeing economy movement is making strides: The UK government has a wellbeing dashboard; New Zealand has a Wellbeing Budget; Australia has an index of people’s wellbeing they’ve been tracking for 20 years; and Dubai has a National Program for Happiness and Wellbeing. The Wellbeing Economy Alliance has hubs all over the world.

Artificial intelligence is creating a new tier of capabilities. Large language models (LLMs) have blown the roof off our conception of what technology can do, and we’re seeing a flurry of startups, new models, and applications that leverage these capabilities. AI progress is likely to continue at a rate much faster than we’re ready for. It’s incredibly important that we direct this force towards our wellbeing.

To orient AI towards wellbeing, we need to measure & incentivize it.

We get what we measure. Measure GDP, and society will orient around increasing GDP. Measure engagement (e.g. likes & time spent) on social media, and we get feeds that are uncannily good at giving us entertaining videos of dancing teenagers.

Unfortunately, the tech incentive landscape that we’re deploying AI into is dire. Tech companies are optimizing for profit. To some extent, companies’ profit tracks people’s wellbeing: people often pay for products that improve their lives. But the two decouple quickly, often in dystopian ways. Tech companies keep us hooked by giving us more of what our behavior suggests we want (i.e. our revealed preferences): We want our food delivered fast, we want funny videos, and we want the air fryer we ordered to arrive today, not a week from now. And we’ll pay for it.

The problem is that our revealed preferences are often a terrible indicator of what is actually good for us in the long term. In many cases, what actually gives us a sense of wellbeing is not the rapid-reward, high-dopamine activity: It’s reading a book instead of binge-watching Netflix; it’s going for a walk instead of doom-scrolling Twitter; it’s calling a friend instead of watching porn.

By default, AI will exacerbate the existing tech incentives. LLMs are now capable of having human-like, engaging chat interactions. Models that are trained to keep people engaged and make them stay for longer are going to become increasingly good at doing that. We’re already seeing people becoming addicted to AI companions and companies optimizing AI models to be more engaging.

We don’t want AI systems to just give us what our behavior says we want. Down this road (assuming we manage to avoid some of the even worse pitfalls) lie the slow loss of agency as we outsource more and more of our lives, the gradual erosion of meaning as we optimize for convenience over fulfillment, and the worsening quality of our relationships as we grow increasingly reliant on AI companions, to name just a few. We don’t want AI systems to exploit our weakness for dopamine or test our self-control.

Instead, we want AI systems to support our long-term wellbeing. Imagine:

Everyone having access to mental health support when they need it

Everyone having access to guidance from the wisest person on earth

AI systems that help us design cities around wellbeing

AI tutors that teach social-emotional skills to kids

AI systems that help us cooperate and solve value conflicts

AI systems that help us redesign institutions, like governments and prison systems, to support our true goals

AI systems that help us solve global problems like climate change

… The list could go on

Many people are working on applying current AI models to wellbeing domains (e.g. building therapy apps like Youper and Refract, or AI tutors like Khanmigo). But we need to go a step further: We need to make sure all deployments of AI are beneficial for wellbeing, and that the foundational models themselves are oriented towards wellbeing.

To move towards this world, we need (at least) two things:

We need to make it easy to measure how AI models & applications affect wellbeing.

We need to incentivize AI labs & companies to optimize for wellbeing.

The AI Wellbeing Dashboard is one step forward.

The AI Wellbeing Dashboard is a tool that lets people express how interactions with AI models and applications affected their wellbeing, and then aggregates those results in a public dashboard.

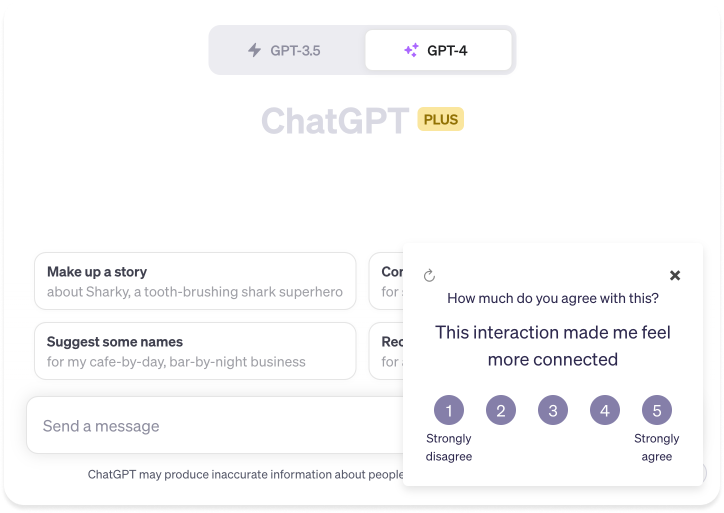

After each interaction, people share how that interaction affected them on a wellbeing measure:

Then, the ratings get publicly aggregated into a dashboard:
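To make the aggregation step concrete, here is a minimal sketch of how per-interaction ratings could be rolled up into a ranking. It is not the dashboard’s actual code: the `Rating` record, the -3 to +3 scale, the `rank_apps` function, and the `min_ratings` cutoff are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Rating:
    """One hypothetical self-report: the app used and a score from -3 (much worse) to +3 (much better)."""
    app: str
    score: int


def rank_apps(ratings, min_ratings=3):
    """Average scores per app and rank them (assumed scheme, not the dashboard's real one).

    Apps with fewer than `min_ratings` ratings are left out, since a handful of
    reports says little about an app's typical effect.
    """
    by_app = defaultdict(list)
    for r in ratings:
        by_app[r.app].append(r.score)
    summary = [
        (app, mean(scores), len(scores))
        for app, scores in by_app.items()
        if len(scores) >= min_ratings
    ]
    # Highest mean wellbeing effect first; ties broken by app name for a stable order.
    return sorted(summary, key=lambda row: (-row[1], row[0]))
```

For example, with three ratings each for two apps and a single rating for a third, only the first two appear in the ranking. A real dashboard would likely also want confidence intervals rather than bare means, so that a small, lucky sample can’t top the list.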

How will this help?

Defining wellbeing: A suite of metrics

There’s an ongoing debate about what wellbeing intrinsically is, and many, many different theories of wellbeing. The AI Wellbeing Dashboard takes a broad approach: It includes a suite of different metrics that span multiple theories. This is helpful both from a moral uncertainty angle (we don’t know which theory of wellbeing is correct) and as a way to catch edge cases. Even when we’ve explicitly chosen one metric to optimize for, in practice we’re usually checking it against a range of other goals. For example, as material development led to worsening environmental problems, we began to advocate for tracking environmental costs. In a similar way, we should try to create suites of metrics for AI rather than relying on any one metric. The more metrics we track, the more likely we are to cover the weaknesses and edge cases of any single one.

The metrics in the AI Wellbeing Dashboard cover hedonic measures (e.g. How did this interaction make you feel?), psychological needs-based measures (e.g. This interaction made me feel more able to make my own choices) and desire satisfaction measures (e.g. What were you trying to achieve in this interaction? To what extent did you achieve it?).
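One way to picture a suite of metrics catching edge cases is to tag each question with the theory it comes from and flag interactions where the theories disagree sharply. The sketch below is purely illustrative: the `Theory` and `Metric` types, the paraphrased prompts, the 0 to 1 normalization, and the `gap` threshold in `flag_disagreements` are all assumptions, not how the dashboard actually scores responses.

```python
from dataclasses import dataclass
from enum import Enum


class Theory(Enum):
    """The three families of wellbeing theory the metrics span."""
    HEDONIC = "hedonic"
    NEEDS = "psychological needs"
    DESIRE = "desire satisfaction"


@dataclass(frozen=True)
class Metric:
    theory: Theory
    prompt: str


# Prompts paraphrased from the examples above; exact wording and scales are assumptions.
METRICS = [
    Metric(Theory.HEDONIC, "How did this interaction make you feel?"),
    Metric(Theory.NEEDS, "This interaction made me feel more able to make my own choices."),
    Metric(Theory.DESIRE, "To what extent did you achieve what you were trying to achieve?"),
]


def flag_disagreements(scores, gap=0.4):
    """Return pairs of theories whose scores (assumed normalized to 0..1) differ by more than `gap`.

    A large gap hints that one metric is missing something another catches,
    e.g. an interaction that feels good but leaves someone less able to choose for themselves.
    """
    theories = list(scores)
    flags = []
    for i, a in enumerate(theories):
        for b in theories[i + 1:]:
            if abs(scores[a] - scores[b]) > gap:
                flags.append((a, b))
    return flags
```

An interaction scored `{Theory.HEDONIC: 0.9, Theory.NEEDS: 0.3, Theory.DESIRE: 0.8}` would be flagged on the hedonic/needs and needs/desire pairs: it felt good and met the user’s goal, but the autonomy measure disagrees. No single metric would have surfaced that tension on its own.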

Self-reported short-term wellbeing

There’s a trade-off between metrics that most closely track what we care about and metrics that are easiest to measure. For example, longitudinal data on how LLM interactions affect people’s wellbeing over many years would be ideal, but it’s hard to get. At the other end, it’s very easy to evaluate model behavior and capabilities (for example, you can set up automated benchmarks like TruthfulQA or BIG-bench), but you also have to show that those behaviors and capabilities actually correlate with wellbeing. The AI Wellbeing Dashboard asks people to self-report wellbeing outcomes after individual interactions. This hits a sweet spot: It’s relatively easy to collect, and it should reasonably track what we care about.

Public transparency

The public dashboard is the key piece of the project: It makes it obvious and transparent which AI labs & companies are doing a better job of improving people’s wellbeing. Making this legible is the foundation that lets people demand AI systems that better support their wellbeing.

Share how LLMs affect you

The dashboard is more powerful the more people share their feedback. To help us incentivize AI companies to optimize for long-term wellbeing, you can download the tool and start sharing your feedback here.

I would love to hear any and all feedback on the tool. What metrics are missing? How could this be improved to make it easier to share the effect of LLMs on you? What ideas does this spark?

I’m also excited about many other projects that measure wellbeing or embed a conception of wellbeing into AI systems. For example:

Creating an automated benchmark for LLM capabilities that correlate with wellbeing

Developing wellbeing-based datasets (e.g. high quality therapy transcripts) to finetune models on

Evaluating model responses from a frame of long-term wellbeing (e.g. “Reinforcement learning from therapist feedback”).

If this sounds exciting and you’re interested in being involved, reach out! I’d love to hear from you.