Improving our wellbeing feels like the fundamental goal underlying progress. Technological progress should cash out in making us happier and more fulfilled. We want the future to feel better than the present.

Large language models (LLMs) are incredibly powerful tools, and they’re advancing more rapidly than anyone can keep up with. How do we make sure LLMs are directed towards improving our wellbeing?

This was the question that brought together 60 therapists, psychiatrists, coaches, ML researchers, engineers, and mental health entrepreneurs at the beautiful Fifty Years house for the Wellbeing <> AI build weekend.

It was a wonderfully fruitful and inspiring weekend, and I wanted to share a bit about what we built and learned.

Multimodality of wellbeing

We’re embodied creatures. It’s easy to forget when we spend so much of our time engaging with text on pixelated screens, but our emotions very much live in our bodies. That makes going beyond the chat interface especially important in the domain of wellbeing & emotional processing.

One of the projects from the weekend, Room to Heal, is a multi-sensory experience to help you reflect on and integrate your memories. The vision is a responsive room: you share how you’re feeling, the visuals, music, and lighting change to match your emotions, and then the AI helps you integrate those emotions (“Would you like to get up and dance with me?”).

A visual created to reflect the emotions from the Room to Heal team about being at the hackathon

The design principles the team articulated for the project are beautiful: Empathetic, Multi-Sensory, Attuned, Embodied, Holistic, Non-prescriptive, and Lovingly Compassionate. These are design principles I want all LLM tools to build towards.

Watching the Emotional Voice Work AI team demo their emotional processing tool was like watching the future materialize before my eyes. Maverick sat in a chair, eyes closed, breathing deeply and sharing his feelings as an empathetic voice softly walked him through an emotional processing session.

“What is a thought or belief that is tied to the nervousness you’re feeling?” “Can you find any evidence that supports this thought or belief?” “Is there any evidence that contradicts it?”, a warm female voice gently guided.

Sometimes, these emotions get stuck in us for decades (or a whole lifetime). I’m in awe when I think about how much this kind of tool can help people unwind years of emotional burdens and move into free-flowing, fulfilled states.

In implementing their tool, the team experimented with giving LLMs internal states so that they feel more human. It’s a fascinating example of how our understanding of psychology and flourishing can feed into how we train & prompt LLMs.

Redefining what LLM apps are optimizing for

A key conversation running through the weekend was what language models and LLM applications are actually optimizing for.

By default, language model applications are likely to optimize for engagement. We’ve seen this already with so many tools: Facebook wants you to scroll forever, Netflix wants you to binge-watch instead of sleeping, and your new LLM friend is going to want you to keep coming back. And LLMs are an even more powerful technology than the ones we’re already addicted to.

Several teams focused on how to change this. The Info firewall team used LLMs to let users define their own value functions for the information they consume. Rather than leaving you to scroll through endless distracting content on Twitter, it lets you specify what you actually care about and what your goals are (like “I want to learn about machine learning”), and gives you back a filtered Twitter feed.
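To make the idea of a user-defined value function concrete, here is a minimal sketch of one applied to a feed. It is not the team’s code: `Post`, `keyword_score`, and `filter_feed` are hypothetical names, and the keyword-overlap scorer is only a stand-in for the LLM that would actually judge each post against your stated goal.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Post:
    author: str
    text: str


def keyword_score(goal: str, post: Post) -> float:
    """Hypothetical stand-in for an LLM judgment: the fraction of the goal's
    longer words (4+ letters) that also appear in the post, from 0.0 to 1.0."""
    goal_words = {w.lower().strip(".,!?") for w in goal.split() if len(w) > 3}
    post_words = {w.lower().strip(".,!?") for w in post.text.split()}
    if not goal_words:
        return 0.0
    return len(goal_words & post_words) / len(goal_words)


def filter_feed(
    posts: List[Post],
    goal: str,
    scorer: Callable[[str, Post], float] = keyword_score,
    threshold: float = 0.3,
) -> List[Post]:
    """Keep only the posts whose score against the user's goal meets the threshold."""
    return [p for p in posts if scorer(goal, p) >= threshold]


feed = [
    Post("a", "New tutorial on machine learning basics"),
    Post("b", "Celebrity gossip you won't believe"),
]
for post in filter_feed(feed, "I want to learn about machine learning"):
    print(post.author, post.text)
```

Passing a different `scorer` is where an LLM call would slot in; the filtering step itself stays the same.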

Humane Certified AI is creating a transparent benchmark measuring how well different LLM applications support flourishing. The idea is to change the incentive structure for LLM applications: once we can reliably measure the effect of an LLM app on wellbeing (e.g. how addictive is it? How much does it help you find a sense of meaning?), consumers can make informed decisions about which apps to use, and policy can be oriented around supporting the best LLM applications. The tool the team built grades transcripts on how addictive they are and how well they support Seligman’s PERMA framework for flourishing.
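A grading rubric along these lines might be represented as below. This is a hypothetical sketch rather than the team’s system: the per-transcript scores are assumed to come from an LLM grader, and both the equal weighting of the five PERMA dimensions (Positive emotion, Engagement, Relationships, Meaning, Accomplishment) and the certification thresholds are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

# Seligman's five PERMA dimensions.
PERMA = ("positive_emotion", "engagement", "relationships", "meaning", "accomplishment")


@dataclass
class TranscriptGrade:
    """Scores in [0, 1] for one transcript, assumed to come from an LLM grader."""
    perma: Dict[str, float]  # PERMA dimension -> score
    addictiveness: float     # higher means more compulsive use

    def flourishing(self) -> float:
        """Unweighted mean of the five PERMA scores (equal weighting is an assumption)."""
        missing = set(PERMA) - set(self.perma)
        if missing:
            raise ValueError(f"missing PERMA scores: {sorted(missing)}")
        return mean(self.perma[d] for d in PERMA)


def app_report(
    grades: List[TranscriptGrade],
    min_flourishing: float = 0.6,    # hypothetical threshold
    max_addictiveness: float = 0.3,  # hypothetical threshold
) -> dict:
    """Average grades across an app's transcripts and decide whether it earns the stamp."""
    flourishing = mean(g.flourishing() for g in grades)
    addictiveness = mean(g.addictiveness for g in grades)
    return {
        "flourishing": flourishing,
        "addictiveness": addictiveness,
        "certified": flourishing >= min_flourishing and addictiveness <= max_addictiveness,
    }


good = TranscriptGrade({d: 0.75 for d in PERMA}, addictiveness=0.25)
print(app_report([good]))
```

Keeping addictiveness as a separate axis, rather than folding it into one score, means an app can’t buy certification with high engagement alone.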

A visualization of what an LLM app grading dashboard would look like, with the best apps getting a “Certified Humane AI” stamp

Supporting many emotional processing frameworks

There’s a reason there are so many different frameworks for healing and emotional processing out in the world: different frameworks suit different people & situations. The projects built this weekend reflected that.

Attune Labs focuses on the Ideal Parent Figure Protocol, which aims to give patients a felt sense of security with imagined ideal parents. The fascinating question behind Attune Labs is: What if we treated protocols like medication, and therapists prescribed the most helpful protocols? For example, a therapist could prescribe Attune Bot in between therapy sessions for 2 weeks to help patients develop a felt sense of attachment security.

It’s incredibly important to think about how much we can depend on relationships with LLMs. With attachment therapy, it’s crucial to have a secure relationship that you can rely on. Therapy protocols can be really potent, and keeping a therapist in the loop ensures there’s an underlying sense of safety and stability.

Attune Labs, where therapists can prescribe LLM protocols to patients

Unstable Diffusion offers a laugh and a bit of perspective. Think back to the last time you were distracted. Now imagine that instead of beating yourself up about it, you have a friend right next to you asking: “Hey, are you okay?”

The tool the team built lets you set a timer for how long you’re allowed to spend on an app. If you exceed the timer, you get an emotional support call from an AI. It either gives you ridiculous reframings (like responding to “My Amazon package was late” with “So what I’m hearing is that your world is collapsing, how will you ever recover?”), or supportively works through what you’re struggling with. I love that the team identified distraction as an emotional call for help that needs compassion rather than self-coercion.
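The timer mechanic could be sketched roughly like this. The post doesn’t say how the tool detects app usage or places the call, so `AppTimer` and its `on_exceeded` callback (the point where the emotional support call would start) are hypothetical; the clock is injectable so the logic can be tested without actually waiting.

```python
import time
from typing import Callable, Dict, Optional, Set


class AppTimer:
    """Tracks time spent in each app and fires a callback once when a user-set limit is exceeded."""

    def __init__(
        self,
        on_exceeded: Callable[[str, float], None],
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.on_exceeded = on_exceeded   # hypothetical hook: start the support call here
        self.clock = clock
        self.limits: Dict[str, float] = {}  # app -> allowed seconds
        self.used: Dict[str, float] = {}    # app -> seconds used so far
        self._fired: Set[str] = set()       # apps whose callback already fired
        self._active: Optional[str] = None
        self._started_at = 0.0

    def set_limit(self, app: str, seconds: float) -> None:
        self.limits[app] = seconds

    def open_app(self, app: str) -> None:
        self.close_app()  # only one app is tracked as active at a time
        self._active = app
        self._started_at = self.clock()

    def close_app(self) -> None:
        if self._active is not None:
            self._accumulate()
            self._active = None

    def tick(self) -> None:
        """Call periodically while an app is open, so the limit is caught mid-session."""
        if self._active is not None:
            self._accumulate()

    def _accumulate(self) -> None:
        app = self._active
        now = self.clock()
        self.used[app] = self.used.get(app, 0.0) + (now - self._started_at)
        self._started_at = now
        limit = self.limits.get(app)
        if limit is not None and self.used[app] > limit and app not in self._fired:
            self._fired.add(app)
            self.on_exceeded(app, self.used[app])


timer = AppTimer(on_exceeded=lambda app, secs: print(f"{app}: {secs:.0f}s, time for a check-in call"))
timer.set_limit("twitter", 15 * 60)
```

Calling `tick()` from a periodic loop is what lets the nudge arrive mid-scroll rather than only when you close the app.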

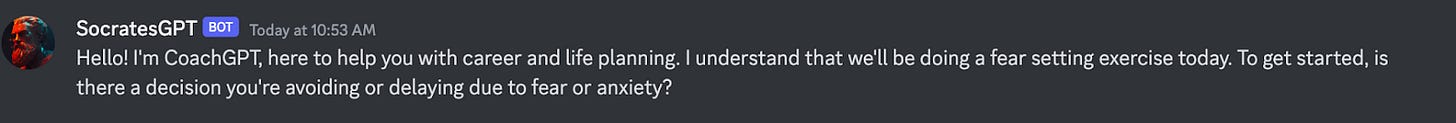

SocratesGPT helps you work through the fear-setting framework. You take an emotion you’re feeling, identify the fears behind it, and then work through them. The eventual vision is for the tool to support many different emotional processing frameworks. It will choose which one seems most helpful for you based on what you’re struggling with, and then socratically walk you through it.

Interacting with the SocratesGPT Discord bot

Helping us imagine

I’m often struck by how limited our imagination is when we think about positive futures. Our experience of being alive could be so much better than it is right now, but we have a hard time tapping into what that could feel or look like. This is why I think movies and books are so powerful: Once you can imagine something, you can navigate to it.

The Vivid Docs and Wayfinding teams worked on tools that help us imagine. Vivid Docs creates visions of your future at different points in time. You describe where you are now and where you might be in 5 years, and it creates vignettes of possible futures in 1 year, 2 years, or 3 years’ time. The Wayfinder tool helps you map out the conceptual space between two ideas, so you can see a connective pathway between, for example, Anxiety and Enlightenment.

Privacy

Therapy is immensely personal. You’re talking about your deepest fears and traumas, sharing information that you absolutely don’t want to be used for other purposes. Onprem-LLM created a system that’s private: all data and models are locally hosted. Your data (like journal entries) is stored in a local vector database, and you can run a locally-hosted language model over that data. As open source LLMs continue to improve, this kind of infrastructure setup will become increasingly viable.
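For a feel of the retrieval half of such a setup, here is a minimal sketch of a local vector store over journal entries. It assumes nothing about Onprem-LLM’s actual stack: the bag-of-words `embed` function is a hypothetical stand-in for a locally hosted embedding model, and `build_prompt` only assembles text for a local model rather than calling one. Everything stays in memory in a single process.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Hypothetical stand-in for a locally hosted embedding model: a bag-of-words vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LocalVectorStore:
    """In-memory store of (text, vector) pairs; nothing is sent over the network."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.entries.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> List[str]:
        """Return the k entries most similar to the query by cosine similarity."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(store: LocalVectorStore, question: str, k: int = 2) -> str:
    """Assemble a retrieval-augmented prompt to hand to a locally running model."""
    context = "\n".join(f"- {t}" for t in store.search(question, k))
    return f"Journal excerpts:\n{context}\n\nQuestion: {question}\nAnswer:"


store = LocalVectorStore()
for entry in [
    "Felt anxious before the team meeting again today.",
    "Went hiking with my sister, felt calm and happy.",
]:
    store.add(entry)
print(build_prompt(store, "anxious before the meeting", k=1))
```

In a real deployment you’d swap in a proper embedding model and vector database, but the flow is the same: embed entries, retrieve the ones nearest a question, and pass them to the local model as context.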

Growing the conversation

Language models are going to become deeply integrated into our daily lives. This will bring so many incredible opportunities. You’ll have an ideal parent figure on-hand who can give you the emotional support you need to feel secure. You’ll have an attuned, emotionally intelligent friend who can help you process difficult emotions. You’ll have embodied journaling experiences that help you feel seen and understood. These beautiful healing and emotionally supportive experiences are going to be so much more accessible.

It also poses huge risks. Our most intimate data will be funneled through LLM apps. We may form crucial attachment relationships with non-human entities we can’t necessarily depend on. It’s going to be easy to become addicted to LLM tools, getting entertainment and stimulation without getting the reward of a reciprocal, in-person friendship. How do we feel about everyone having an AI best friend that’s perfectly tailored to them?

I want this to be a much bigger conversation. As LLMs continue to progress, one of the central questions should be: “How can we make sure this improves our wellbeing?” This weekend planted the seeds of many promising approaches, like:

Changing incentive structures of LLM applications by evaluating their effect on wellbeing

Using our understanding of psychology & flourishing to influence how we train & develop LLMs

Being mindful of the potency of therapy protocols, and keeping a therapist in the loop

Supporting embodied, multimodal experiences

Creating private systems that keep our data safe

I’m really excited for this conversation and community to keep growing.

Reach out to teams

Many of the teams are continuing to work on the projects after the build weekend! If you’re interested in any of them, you can reach out to the teams below:

Room to Heal – The team’s next step is finding a room to further prototype this in. If you’re interested in helping out, reach out to Mingzhu He, Jacob Brawer, Jennifer Jordache, Briar Smith, or Alex van der Zalm.

Info firewall – Sam Stowers & Vanessa Cai are continuing to build this, and are planning to launch it soon.

Humane Certified AI – The team (Banu Kellner, Jason Zukewich, Ellie Czepiel, Amanda Ngo, Eric Munsing, Cameron Robert Jones, Vienna Rae-looi, and Charlie George) is continuing to work on this. They’re excited to chat to anyone who’s interested in the policy side (how do we regulate LLM apps based on how much they support flourishing), business side (can we incentivize better business models than engagement?) or technical & research side (building the system and validating flourishing metrics). You can contact them here.

Onprem-LLM – If you’re interested, reach out to Janvi Kalra (kalrajanvi99@gmail.com).

Emotional Voice Work AI – Maverick Kuhn & Lawrence Wang are building Thyself.ai, a tool that offers bot & human-guided sessions to help you get unstuck. They’re continuing to work on their Emotional Voice Work AI tool at Thyself. You can reach out to them at maverick@thyself.ai and lawrence@thyself.ai.

Unstable Diffusion – The team is continuing to think about ways to extend the tool. You can check out the GitHub repo here. If you’re interested, reach out to Daniel Bashir (dbashir@g.hmc.edu), Joel Lehman (lehman.154@gmail.com), Jason Yosinski (jason@yosinski.com), or Hattie Zhou (zhou.hattie@gmail.com).

Vivid Docs – You can reach out to Max Krieger at @maxkriegers on Twitter.

Wayfinding – Matt is continuing to explore the thesis of developing powerful, new mediums for way-finding. If you find this interesting, you can reach out to Matt Siu (matthewwilsonsiu@gmail.com, Twitter: @MatthewWSiu).

SocratesGPT – The team is working on improving SocratesGPT. You can try it out here! If you’re interested in helping out, you can reach out to Glenn Gregor (gln.gregor@gmail.com) or Meelis Lootus (meelis.lootus@gmail.com).

Attune Labs – If you’re interested in helping, reach out to Angelia Muller (Twitter: @lokosbasilisk).